Howdy, folks—I’m Lee, and I do all the server admin stuff for Space City Weather. I don’t post much—the last time was back in 2020—but the site has just gone through a pretty massive architecture change, and I thought it was time for an update. If you’re at all interested in the hardware and software that makes Space City Weather work, then this post is for you!

If that sounds lame and nerdy and you’d rather hear more about this June’s debilitating heat wave, then fear not—Eric and Matt will be back tomorrow morning to tell you all about how much it sucks outside right now. (Spoiler alert: it sucks a whole lot.)

The old setup: physical hosting and complex software

For the past few years, Space City Weather has been running on a physical dedicated server at Liquid Web’s Michigan datacenter. We’ve utilized a web stack made up of three major components: HAProxy for SSL/TLS termination, Varnish for local cache, and Nginx (with php-fpm) for serving up WordPress, which is the actual application that generates the site’s pages for you to read. (If you’d like a more detailed explanation of what these applications do and how they all fit together, this post from a couple of years ago has you covered.) Then, in between you guys and the server sits a service called Cloudflare, which soaks up most of the load from visitors by serving up cached pages to folks.

It was a resilient and bulletproof setup, and it got us through two massive weather events (Hurricane Harvey in 2017 and Hurricane Laura in 2020) without a single hiccup. But here’s the thing—Cloudflare is particularly excellent at its primary job, which is absorbing network load. In fact, it’s so good at it that during our major weather events, Cloudflare did practically all the heavy lifting.

With Cloudflare eating almost all of the load, our fancy server spent most of its time idling. On one hand, this was good, because it meant we had a tremendous amount of reserve capacity, and reserve capacity makes the cautious sysadmin within me very happy. On the other hand, excess reserve capacity without a plan to utilize it is just a fancy way of spending hosting dollars without realizing any return, and that’s not great.

Plus, the hard truth is that the SCW web stack, bulletproof though it may be, was probably more complex than it needed to be for our specific use case. Having both an on-box cache (Varnish) and a CDN-type cache (Cloudflare) sometimes made troubleshooting problems a huge pain in the butt, since multiple cache layers means multiple things you need to make sure are properly bypassed before you start digging in on your issue.
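For the technically curious, here is a minimal sketch of the kind of check that helps when two cache layers are stacked: given the response headers for a page, guess which layer answered. It assumes Cloudflare's `CF-Cache-Status` header and Varnish's default `X-Varnish` header (one transaction ID on a miss, two on a hit). The function name `describe_cache_path` is made up for illustration, and this is not the tooling actually used on the SCW server.

```python
from __future__ import annotations


def describe_cache_path(headers: dict[str, str]) -> list[str]:
    """Summarize which cache layers appear to have handled a response."""
    # HTTP header names are case-insensitive, so normalize before lookup.
    h = {name.lower(): value.strip() for name, value in headers.items()}
    notes: list[str] = []

    # Cloudflare reports its cache decision (HIT, MISS, BYPASS, DYNAMIC, ...)
    # in the CF-Cache-Status header.
    cf_status = h.get("cf-cache-status")
    if cf_status:
        notes.append(f"cloudflare: {cf_status.upper()}")

    # Assumption: Varnish is using its default X-Varnish header, which holds
    # one transaction ID on a miss and two IDs on a hit (the current request
    # plus the earlier request that populated the cache).
    x_varnish = h.get("x-varnish")
    if x_varnish:
        ids = x_varnish.split()
        notes.append("varnish: HIT" if len(ids) >= 2 else "varnish: MISS")

    if not notes:
        notes.append("no cache headers seen")
    return notes
```

Feeding it the headers from a single request shows at a glance whether a stale page came from the CDN, the on-box cache, or neither, which is the question you have to answer before you can start debugging the application itself.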

Between the cost and the complexity, it was time for a change. So we changed!

Leaping into the clouds, finally

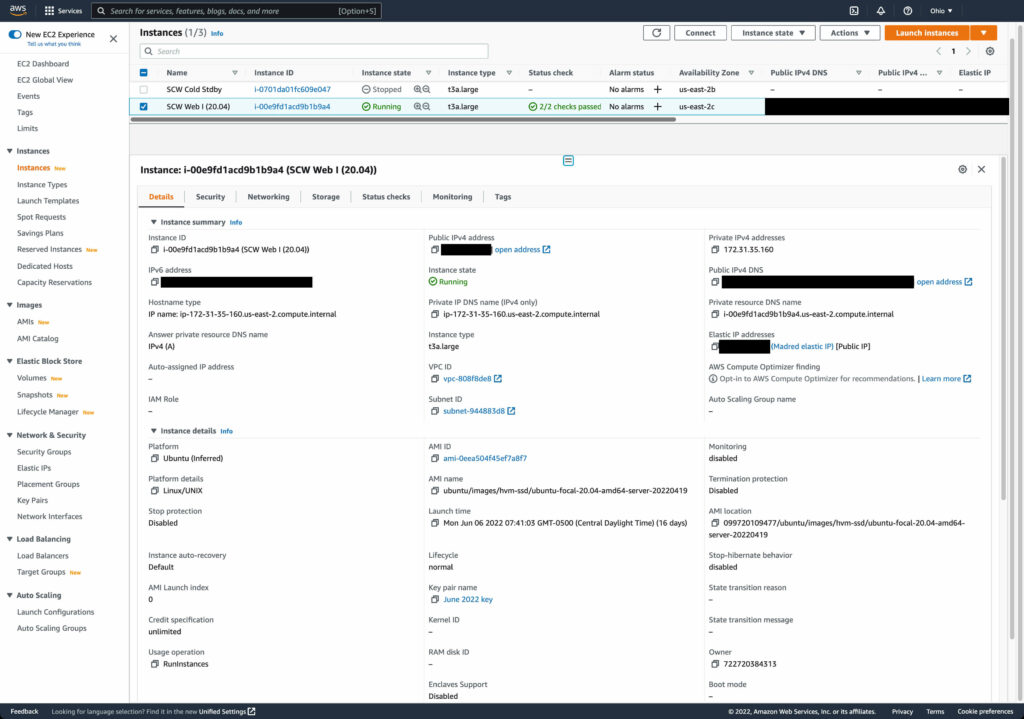

As of Monday, June 6, SCW has been hosted not on a physical box in Michigan, but on AWS. More specifically, we’ve migrated to an EC2 instance, which gives us our own cloud-based virtual server. (Don’t worry if “cloud-based virtual server” sounds like geek buzzword mumbo-jumbo—you don’t have to know or care about any of this in order to get the daily weather forecasts!)

Making the change from physical to cloud-based virtual buys us a tremendous amount of flexibility, since if we ever need to, I can add more resources to the server by changing the settings rather than by having to call up Liquid Web and arrange for an outage window in which to do a hardware upgrade. More importantly, the virtual setup is considerably cheaper, cutting our yearly hosting bill by something like 80 percent. (For the curious and/or the technically minded, we’re taking advantage of EC2 reserved instance pricing to pre-buy EC2 time at a substantial discount.)

On top of controlling costs, going virtual and cloud-based gives us a much better set of options for how we can do server backups (out with rsnapshot, in with actual-for-real block-based EBS snapshots!). This should make it massively easier for SCW to get back online from backups if anything ever does go wrong.

The one potential “gotcha” with this minimalist virtual approach is that I’m not taking advantage of the tools AWS provides to do true high availability hosting—primarily because those tools are expensive and would obviate most or all of the savings we’re currently realizing over physical hosting. The only conceivable outage situation we’d need to recover from would be an AWS availability zone outage—which is rare, but definitely happens from time to time. To guard against this possibility, I’ve got a second AWS instance in a second availability zone on cold standby. If there’s a problem with the SCW server, I can spin up the cold standby box within minutes and we’ll be good to go. (This is an oversimplified explanation, but if I sit here and describe our disaster recovery plan in detail, it’ll put everyone to sleep!)
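As a rough illustration of the decision behind a cold standby (and not a description of the actual SCW recovery procedure, which is manual), the sketch below only recommends failing over after several health checks in a row have failed, so that a single blip doesn't trigger a switch. The function name `should_fail_over` and the threshold of three are assumptions chosen for the example.

```python
from __future__ import annotations


def should_fail_over(recent_checks: list[bool], threshold: int = 3) -> bool:
    """Return True when the last `threshold` health checks all failed.

    `recent_checks` is ordered oldest to newest; True means the primary
    server answered its health check, False means it did not.
    """
    if threshold < 1:
        raise ValueError("threshold must be at least 1")
    # Not enough history yet to make a call, so stay on the primary.
    if len(recent_checks) < threshold:
        return False
    # Fail over only if none of the most recent checks succeeded.
    return not any(recent_checks[-threshold:])
```

Requiring consecutive failures trades a slightly slower reaction for fewer false alarms, which is a reasonable fit when bringing up the standby is a deliberate, hands-on step.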

Simplifying the software stack

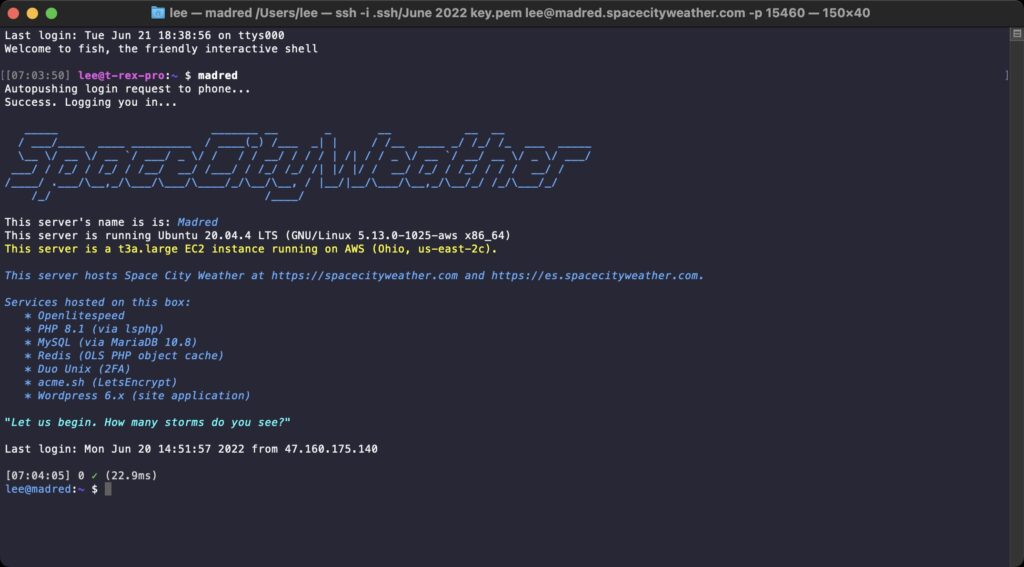

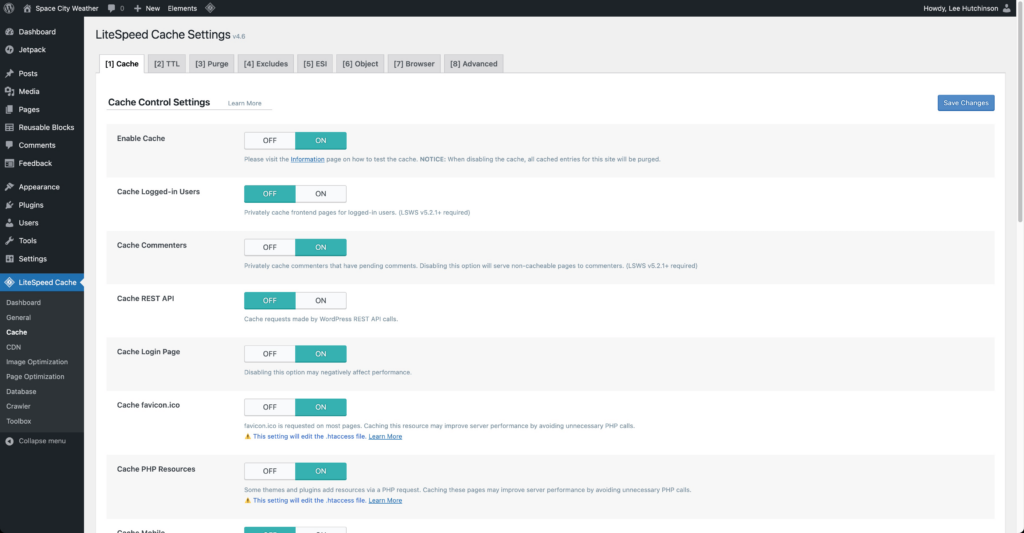

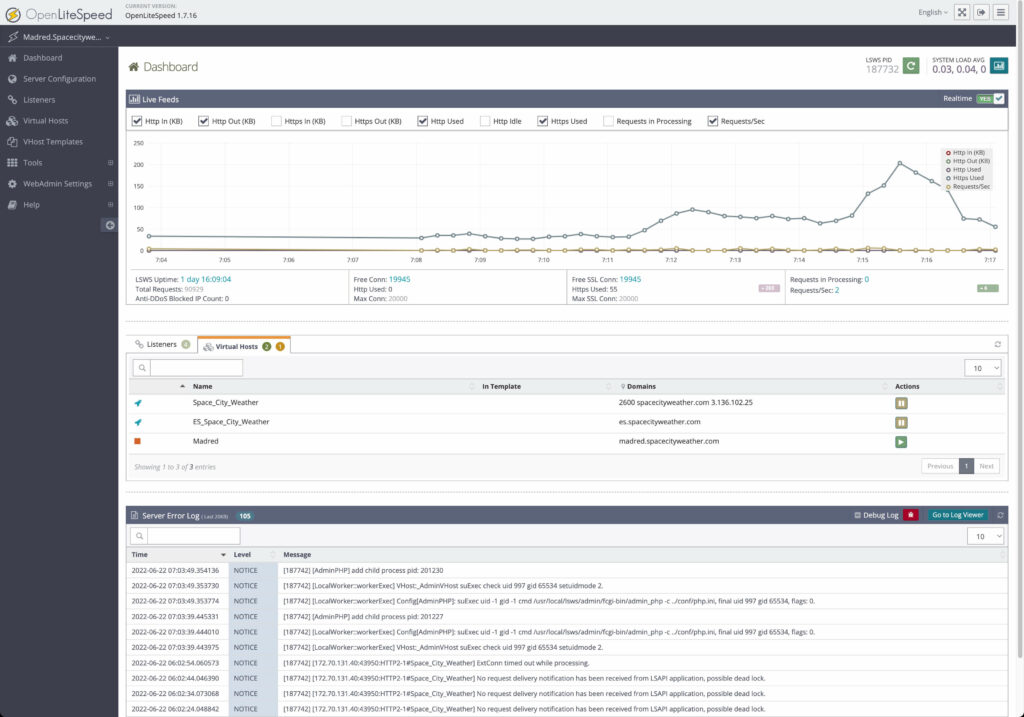

Along with the hosting switch, we’ve re-architected our web server’s software stack with an eye toward simplifying things while keeping the site responsive and quick. To that end, we’ve jettisoned our old trio of HAProxy, Varnish, and Nginx and settled instead on an all-in-one web server application with built-in caching, called OpenLiteSpeed.

OpenLiteSpeed (“OLS” to its friends) is the libre version of LiteSpeed Web Server, an application which has been getting more and more attention as a super-quick and super-friendly alternative to traditional web servers like Apache and Nginx. It’s purported to be quicker than Nginx or Varnish in many performance regimes, and it seemed like a great single-app candidate to replace our complex multi-app stack. After testing it on my personal site, SCW took the plunge.

There were a few configuration growing pains (eagle-eyed visitors might have noticed a couple of small server hiccups over the past week or two as I’ve been tweaking settings), but so far the change is proving to be a hugely positive one. OLS has excellent integration with WordPress via a powerful plugin that exposes a ton of advanced configuration options, which in turn lets us tune the site so that it works exactly the way we want it to work.

Looking toward the future

Eric and Matt and Maria put in a lot of time and effort to make sure the forecasting they bring you is as reliable and hype-free as they can make it. In that same spirit, the SCW backend crew (which so far is me and app designer Hussain Abbasi, with Dwight Silverman acting as project manager) try to make smart, responsible tech decisions so that Eric’s and Matt’s and Maria’s words reach you as quickly and reliably as possible, come rain or shine or heatwave or hurricane.

I’ve been living here in Houston for every one of my 43 years on this Earth, and I’ve got the same visceral first-hand knowledge many of you have about what it’s like to stare down a tropical cyclone in the Gulf. When a weather event happens, much of Houston turns to Space City Weather for answers, and that level of responsibility is both frightening and humbling. It’s something we all take very seriously, and so I’m hopeful that the changes we’ve made to the hosting setup will serve visitors well as the summer rolls on into the danger months of August and September.

So cheers, everyone! I wish us all a 2022 filled with nothing but calm winds, pleasant seas, and a total lack of hurricanes. And if Mother Nature does decide to fling one at us, well, Eric and Matt and Maria will talk us all through what to do. If I’ve done my job right, no one will have to think about the servers and applications humming along behind the scenes keeping the site operational—and that’s exactly how I like things to be 🙂

Thanks for all you have done and continue to do. I read your forecast each day and trust y’all for all my tropical updates.

I have absolutely no understanding of what you wrote 🥴, but I am SO thankful there is a committed team at SCW to help folks like me navigate the weather simply and effectively. Keep up the great work. It’s much appreciated. 👍

Leslie…same. Still grateful for this team. Also, I have their wonderful insulated drinking glass. Love these guys.

Thank you for all you do to be part of the Team that keeps us all informed.

Joel in League City

Thank you! Appreciate communication!

I don’t pretend to understand any of what you have written in this post. I’m just happy to know that you, Matt, Eric and Maria are here keeping us all informed with no-hype forecasts, no matter what the weather. Thank you!

I wish us all a 2022 filled with nothing but calm winds, pleasant seas, and a total lack of hurricanes.

~~~

Amen to that! And thank you for the work you do.

Some of this went over my head, but thanks for sharing anyway! I look forward to the no-hype forecasts. I appreciate everything y’all do.

Wow Lee, sounds like the wild HILife editor is carrying on our tradition of excellence. So proud of you! Ran into Long Chu c/o’89 at Astros game and he’s in charge of Arts, Culture etc in H-town. Thanks for all you do!

❤️ ❤️ ❤️ Ms. J!!! ❤️ ❤️ ❤️

❤️🐾

Thank you! I admit that I do not understand the technical information but I am impressed by your labor, your skills, your effort to inform, and your sense of responsibility to us readers.

Hey I really don’t understand much of the tech stuff, but I read through it all and tried to follow along with what I could. The tech crew’s work is greatly appreciated and all of these weather forecasts would not be possible without you guys. Y’all are an essential cog in the machine. Thank you very much, y’all.

Too tech for me but just another example of how y’all are always looking out for us! So glad you’re here.

Lee failed to mention that he is an incredible writer and happens to be the Senior Technology Editor over at Ars Technica. To read more of his work, go here:

https://arstechnica.com/author/lee-hutchinson/

Thank you for the kind words, John. Your check is in the mail 😉

Good gracious, it was ALL Greek to me! But thanks for knowing and implementing all this for your wonderful hype-free and amazingly accurate forecasts. Thanks also to the ever increasing crew and staffers that work together to bring us all this information to keep us safe.

Thanks for the update. Sounds like a plan.

Good post for a geek like me, a retired Systems Analyst. Thanks!

Just as it takes a village to raise a child, it takes a team to effectively produce a web page and a blog. Thanks for being the technical side of the great forecasts Eric and Matt produce daily. I greatly appreciate all of you for being there each and every day.

LOVE this post!! I’m a digital content manager and I always try to learn as much from our website developer as possible so I can be a good client. Thank you for what you do, Lee! Eric, Matt and Maria, have you all thought about scaling SCW up to other cities with weather that relates to HOU, such as SA and AUS, even DFW? Of course that would require hiring fellow meteorologists who also have senses of humor (and finding site sponsors) but it would be very cool to see how the fronts coming down here from the north have already affected DFW and/or AUS, or for them to learn what the tropical system means for them. It sounds like SCW’s website could handle the additional traffic?

Thanks for making it work

For someone who knows absolutely nothing about what you posted, I found it very interesting. I just love SCW. Thank you for making it work so seamlessly.

Great job not doing a “lift and shift” getting into public cloud. My wife and I are techies and we just want to vomit anytime a sales droid says that.

These are the only posts I’ll comment on as a peek into the backend is far more interesting to me! Also love the Cardassian namings!

Thanks to you Lee, and the other ‘behind the scenes’ folks, for all of your hard work!!

Um, you’re still hanging out a window with a barometer, aren’t you?

Thanks so much for the detailed behind the scenes information. I am far from a tech nerd, but I do love knowing how things work.

Brenda

Nice explanation! I’m an IT guy, but web hosting is not my bailiwick. I am taking some online training for WordPress, and have learned how much goes into hosting websites. This is not for the faint of heart! Good job!

It can be complex! But that can be fun, too—complex problems can mean opportunities for creative problem solving with fun tools that you might not otherwise have an excuse to mess with! 😀

I had no idea that hosting a website was so complicated! Thanks for taking the time to explain it, and thanks also for all of your hard work.

Speaking as a Cloud Engineer who actually understood 95% of what you talked about (well, not some of the web server specifics – I’m an infrastructure guy), I might ask you why not host it in Azure… except I know exactly why you wouldn’t host a site this comparatively small in Azure. Any thoughts, though, to multi-cloud hosting, as a backup to your backup?

Also, I’m a little wary of some of the details you left unredacted in the AWS console pic. You’ve given out the starting OS version and distro of the host, so any would-be attacker could narrow down their list of attack vectors significantly.

I’m not terribly worried about basic details like that—our attack surface is reasonably narrow, and security through obscurity as a primary defense is always bound to fail. (Nothing wrong with including it as a small part of a defense-in-depth strategy, though.)

Re: multi-cloud hosting — If I had the skill and the budget, I’d set up cross-region EBS replication from snaps so that the box’s volumes are constantly getting shipped to a different region, and then stand up a hot-standby EC2 host in that region. Throw a load balancer in front and bind the elastic IP to it, and there’s my quick-n-dirty high availability setup.

I can’t think of any potential disaster that would screw up two geographically separated AWS regions that wouldn’t also be world-ending, heh. If the space asteroid takes out AWS Ohio and AWS Virginia, we have larger problems than the kind that SCW can help with 😀

I do appreciate that you host it out of the gulf region. My stupid company in Houston also hosts everything locally. So if something hits the region it also takes down the datacenter.

Yikes, yeah, I would call that strategy not conducive to maximum uptime.

As a 20+ year IT guy I most appreciate the details. Many folks think the cloud is a place to store your pics and iPhone backups. So giving a glimpse into what the cloud really does for everyone is awesome!

Thank you for showing us this part. I am grateful for your daily email, posts, web updates!

Very good technical info! As a very experienced (20+++++) IT’er, it is good to hear how much your setup is well positioned for the future. Keep up the good work!

Thank you guys!

What is your observability stack? Grafana / Prometheus / Loki would be absolutely perfect for this and it looks like there is a litespeed prometheus metrics exporter already ready to go.

My observability stack is “logging in and looking at log files manually” 😀 Some kind of central solution would be smart, but there are so many other things to do, sadly. Maybe one day!

Kudos to the whole team – Happy to hear about your upgrade. It’s a blessing to have the dependable weather info SCW provides. Many thanks, Susan Baker

Thanks for the post – good stuff! I feel for the guys – nothing to say but the weather forecast from Good Morning Vietnam, so you need to distract us with shiny objects!

I’m not very tech savvy, but I figure I owe it to you to read about your work since Space City Weather can’t do it without you. I am from Texas, live on the Plains now, and have family that is affected by your weather reports in and around Houston. Tell your mother that this (grand)mother is thankful for the work that you do!

Are you still on MariaDB or did you switch to RDS?

Stuck with MariaDB running on the EC2 host, because as near as I can tell from some reading, at the scale we’re operating at there’d be very little benefit and I’d be paying for an RDS instance on top of the EC2 instance. Running MariaDB on-box maximizes the utility of the single t3a.large I’m on by giving it a little more to do.

If I were doing this with an eye to real HA or even full fault tolerance, I’d be much more inclined to use RDS.

Terrific – and I understood a good bit of what you wrote. Thanks so much. I feel safe with Eric, Matt, Maria, Dwight, and you taking care of my weather info.

Neat! [takes picture]

Solid stack and setup. I especially love the ascii shell banner you got going on there 🙂

Dang if that wasn’t more interesting than it should have been! Thanks for sharing.

I didn’t understand anything you wrote about, but thank you for the great, accessible information!

Fascinating! Thanks for all the tech explanations.

Where do I make comments about the iOS App? The latest weather update I see there is from June 9th. I’ve got version 1.5.143 on my phone.

I’m glad to see I’m not the only one who didn’t understand a thing in the article.

I’m still in recovery from all the carnage DOS 5 caused us at work.

“Doublespace? Don’t mind if I do!”